10 Common Social Media Data Collection Challenges (And How to Avoid Them)

Collecting social media data sounds simple until it isn’t. Explore 10 real-world challenges teams face and practical ways to avoid blind spots.

Collecting social media data sounds simple. Pull numbers. Read charts. Make decisions. Except it never works that cleanly.

Every platform plays by its own rules. Metrics look familiar but behave differently. APIs change without warning. Data disappears, reappears, or tells half the story.

And you are left stitching screenshots, exports, and dashboards together, hoping nothing important slipped through the cracks.

Then there is scale. One brand. Ten platforms. Hundreds of posts. Thousands of signals to track, compare, and explain.

To make sense of this chaos, I spoke with Radhika Sarin, senior social media manager at Pure Storage. She shared how she navigates these data collection challenges in the real world and turns the data into insights that shape her team’s social media marketing strategy.

Key takeaways

- What does social media data collection entail? Social media data collection covers everything that happens before analysis, from pulling raw platform signals to storing, standardizing, and preserving them so insights are accurate, comparable, and trustworthy over time.

- What are some common challenges in social media data collection (and how to deal with them)? Social media data collection is complicated by platform limits, data quality issues, fragmentation, compliance risks, bias, missing competitor and historical data, inconsistent metrics, manual effort, and data overload. These challenges are best managed through clear standards, automation, and context-driven analysis.

What does social media data collection entail?

Social media data collection is everything that happens before analysis even begins. It is the work of pulling raw signals from platforms and turning them into something usable, consistent, and reliable.

Here are the different types of data social media marketers need to collect:

- Account-level data: Follower growth, reach, impressions, profile visits, and audience demographics. This data shows how your presence is evolving over time and whether your audience is growing in the right direction.

- Content-level data: Post and video performance metrics like likes, comments, shares, saves, clicks, video views, and watch time. This is where you learn what formats, topics, and creatives truly connect.

- Campaign and time-based data: Performance by campaign, date range, posting frequency, or time of day. This layer adds context and helps teams compare launches, measure momentum, and spot patterns.

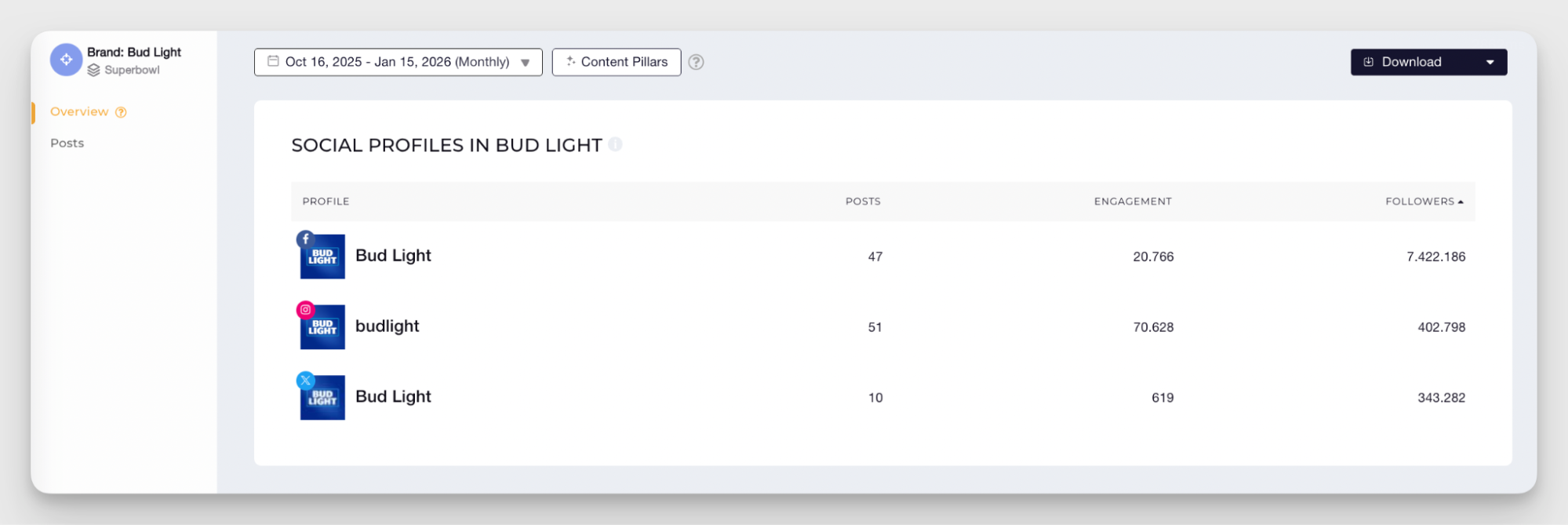

- Competitive data: Benchmarks, competitor growth, engagement rates, and share of voice. It answers the question every stakeholder asks: how are we doing compared to others?

All of this data comes with platform-specific definitions, formats, and limits. Collecting it also means storing historical data before platforms delete it, standardizing metrics, and preserving clean records teams can trust over time.

10 major challenges in social media data collection (and how to deal with them)

Here are common challenges of collecting social media data you may face and steps you can take to deal with them.

1. Platform limitations and API restrictions

Every social platform decides what data you get, how often you can pull it, and how deep that data goes.

Some metrics simply do not exist at an API level. Stories, Lives, and Shorts often come with partial or no data, especially for competitors.

Then there are platform-specific restrictions. Rate limits slow down large data pulls. Throttling kicks in when you scale. Certain metrics are only available for business accounts, verified profiles, or specific regions.

On top of that, APIs change, and access limitations shift with them. Endpoints get deprecated. Fields disappear. What worked last quarter quietly breaks this one.

Radhika described the same social media data collection issues:

API restrictions mean we’re often working with ‘best available’ data rather than a complete record. Rate limits and short historical windows push us toward periodic snapshots instead of continuous trend lines, which makes things like seasonality, long deal cycles, or crisis baselines harder to prove.

The biggest gap is what we can’t see: audience‑level detail (seniority, company size, true reaction breakdowns) and, in some cases, post‑level audience drill‑downs on platforms like LinkedIn.

The result is fragmented datasets and invisible blind spots that surface only when someone asks a hard question.

How to deal with it?

- Centralize data using third-party tools: Tools like Socialinsider aggregate data across platforms and preserve it historically, reducing data loss.

- Be explicit about format coverage: Document which formats are included and call out known gaps such as Stories and Lives in competitive reporting.

- Set expectations before reporting starts: Align stakeholders early on what the data can and cannot show to avoid surprises later.

- Add qualitative context where numbers fall short: Use creative reviews, comment analysis, and manual checks to complement missing quantitative metrics.
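If your team pulls from platform APIs directly rather than through a tool, the rate limits described above are usually handled by waiting and retrying instead of failing the whole pull. Here is a minimal sketch of that idea in Python. `RateLimitError` and the `fetch` callable are stand-ins I made up for illustration; real API clients signal rate limiting in their own way (often an HTTP 429 response), so treat this as a pattern, not a drop-in integration.

```python
import random
import time


class RateLimitError(Exception):
    """Hypothetical error: the platform rejected a call for exceeding its quota."""


def fetch_with_backoff(fetch, max_retries=5, base_delay=1.0, sleep=time.sleep):
    """Call fetch(); on RateLimitError wait and retry, doubling the wait each time."""
    for attempt in range(max_retries + 1):
        try:
            return fetch()
        except RateLimitError:
            if attempt == max_retries:
                raise  # give up so the caller can record the gap
            # Exponential backoff plus a little jitter so parallel jobs
            # do not all retry at the same moment.
            delay = base_delay * (2 ** attempt) + random.uniform(0, 0.1)
            sleep(delay)
```

When the retries run out, the error is re-raised on purpose: a pull that fails loudly can be documented as a known gap, while a pull that silently returns nothing becomes one of the blind spots described above.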

2. Data quality issues

Even when data is technically available, it is not always reliable.

Social media datasets often arrive incomplete, with missing posts, partial metrics, or gaps caused by API limits and collection timing.

Duplicate content can creep in through cross-posting, reposts, or repeated imports, quietly inflating performance numbers.

Spam and bot-generated activity add another layer of noise, creating engagement spikes that look impressive but have no real impact.

On top of that, platforms format and define metrics differently, making side-by-side comparisons misleading if left unchecked. Any one of these data quality issues can quietly skew your analysis.

How to deal with it?

- Document report coverage clearly: Define exactly which platforms, accounts, formats, and metrics are included so stakeholders understand what the data represents and what it does not.

- De-duplicate content during data cleaning: Identify reposts, cross-posted content, and duplicate imports to prevent double counting and distorted performance trends.

- Flag and investigate abnormal spikes: Remove or annotate engagement surges that do not align with historical patterns, posting activity, or audience behavior.

- Prioritize higher-signal engagement metrics: Focus analysis on comments, saves, and shares, which are harder to game and more indicative of real audience interest.

- Avoid forced cross-platform comparisons: Keep platform-specific metrics separate when definitions differ, instead of merging them into misleading totals.

- Normalize performance with ratios: Use metrics like engagement rate or interactions per follower to enable fairer comparisons across platforms and account sizes.
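Three of the steps above (de-duplicating, flagging spikes, and normalizing with ratios) are easy to picture in code. The sketch below is illustrative only: the field names (`platform`, `post_id`, `comments`, `shares`, `saves`) are assumptions about how an export might look, and the "three times the median" spike rule is an arbitrary starting threshold, not an industry standard.

```python
from statistics import median


def dedupe_posts(posts):
    """Keep the first record for each (platform, post_id) pair."""
    seen = set()
    unique = []
    for post in posts:
        key = (post["platform"], post["post_id"])
        if key not in seen:
            seen.add(key)
            unique.append(post)
    return unique


def engagement_rate(post, followers):
    """Interactions per follower, in percent.

    Counts only comments, shares, and saves; this is the sketch's own
    definition, chosen to match the higher-signal metrics named above.
    """
    interactions = post["comments"] + post["shares"] + post["saves"]
    return 100.0 * interactions / followers if followers else 0.0


def flag_spikes(values, threshold=3.0):
    """Return the indexes of values above threshold x the series median."""
    baseline = median(values)
    return [
        i for i, value in enumerate(values)
        if baseline > 0 and value > threshold * baseline
    ]
```

Note that `dedupe_posts` only catches repeated imports of the same post. Cross-posted content carries a different ID on each platform, so catching it would need a content-based key, such as a normalized caption. Flagged spikes are returned as indexes so they can be annotated and investigated, not deleted automatically.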

3. Data fragmentation across tools and teams

You open three dashboards before your first meeting. Native platform analytics for quick checks. A scheduling tool for post-level data. A separate analytics platform for monthly reporting. By the time you reach the slide with ‘final numbers,’ you already know someone will ask why they look different elsewhere.

Social media data rarely lives in one place. It is spread across tools, exports, internal dashboards, and spreadsheets. Each source applies its own rules, time ranges, and metric definitions.

The result is conflicting numbers, extra reconciliation work, and reporting conversations that focus on validation instead of insight.

How to deal with it?

- Consolidate data in a single platform: Use one tool like Socialinsider to collect and standardize cross-platform data, reducing discrepancies caused by multiple sources.

- Define a clear source of truth: Decide which tool or dataset owns official reporting numbers and ensure every team references it consistently.

- Align teams on metrics before reporting starts: Agree upfront on which metrics matter, how they are calculated, and which ones are out of scope to avoid last-minute debates and rework.

4. Privacy, compliance, and legal challenges

This one usually shows up quietly. You pull a dataset, everything looks fine, and then someone asks a simple question — Are we actually allowed to use this? That is when things get uncomfortable.

Privacy compliance in social media data collection falls under legal frameworks like GDPR and CCPA, along with each platform’s own terms of service. Not all data is fair game.

Public metrics are generally accessible, while private data, personal identifiers, and anything behind permissions come with strict boundaries. Consent matters, and so does how and where the data is stored. Ignoring these rules can bring fines, and it also risks trust, both with users and stakeholders.

Here’s what Radhika had to say about this challenge —

Some common missteps I have seen include scraping or storing personally identifiable information against platform terms, keeping data for far longer than any policy allows, and moving data across regions without the right safeguards. Cross‑border compliance needs to be examined in detail; regulations and enforcement differ across countries, tools, and data flows, so what’s acceptable in one context may not automatically apply in another.

Compliance around social media data can easily be over‑corrected or under‑corrected, and both extremes distort touchpoints and attribution signals, leading to misleading views of what’s actually working at each stage of the funnel.

How to deal with it?

- Use tools with transparent data practices: Choose platforms that clearly document how data is collected, processed, and stored, and align with regulations like GDPR and CCPA.

- Avoid scraping and unofficial workarounds: If a platform does not provide a metric through official APIs, treat it as off-limits instead of finding creative shortcuts.

- Define data boundaries clearly: Document which data types are excluded from reporting, such as private profiles, DMs, or user-level identifiers.

- Make consent explicit and traceable: For owned accounts and campaigns, ensure consent mechanisms are clear, documented, and easy to audit.

- Train teams on compliance basics: Regularly educate teams on data ethics, privacy rules, and platform policies.
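One way to make data boundaries concrete is to enforce them at the point of storage with an allowlist, so any field that has not been explicitly approved never reaches your records. The sketch below assumes records arrive as dictionaries; every field name in it is hypothetical, and which fields belong on the list is a policy decision for your team, not something the code can decide.

```python
# Hypothetical allowlist: only aggregate, non-personal fields are stored.
ALLOWED_FIELDS = {"platform", "post_id", "published_at", "likes", "comments", "shares"}


def strip_to_allowlist(record, allowed=ALLOWED_FIELDS):
    """Return a copy of the record containing only approved fields."""
    return {key: value for key, value in record.items() if key in allowed}
```

An allowlist fails safe: if a platform export starts including a new user-level field, it is dropped by default until someone reviews it and adds it deliberately.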

5. Data collection bias and accuracy problems

Even accurate data can point you in the wrong direction when bias creeps in. Here are some common biases you need to look out for:

- Sampling bias: Social data often reflects only active or highly engaged users, not the broader audience that scrolls, watches, and never interacts.

- Platform bias: Each platform attracts different demographics and behaviors, which means performance on one network cannot be assumed to translate to another.

- Algorithmic bias: Algorithms decide which content gets visibility, amplifying certain posts while quietly suppressing others regardless of quality.

- Temporal bias: The timing of data collection matters. Launch periods, holidays, trends, or viral moments can skew results if taken out of context.

How to deal with it?

- Focus on trends instead of individual data points: Look for consistent movement across weeks or months rather than reacting to one spike or dip that may be driven by algorithms or timing.

For example, I use Socialinsider to see the evolution of each metric over time. I can even click on the upticks or dips to see which posts caused them.

- Separate analysis by content format: Evaluate Reels against Reels and carousels against carousels so format-driven visibility differences do not distort insights.

- Standardize reporting windows across teams: Use the same time ranges for all reports to reduce noise caused by seasonal or event-driven spikes.

- Document data limitations and assumptions: Call out platform limitations, algorithmic influence, and sampling gaps so decisions are made with full context.

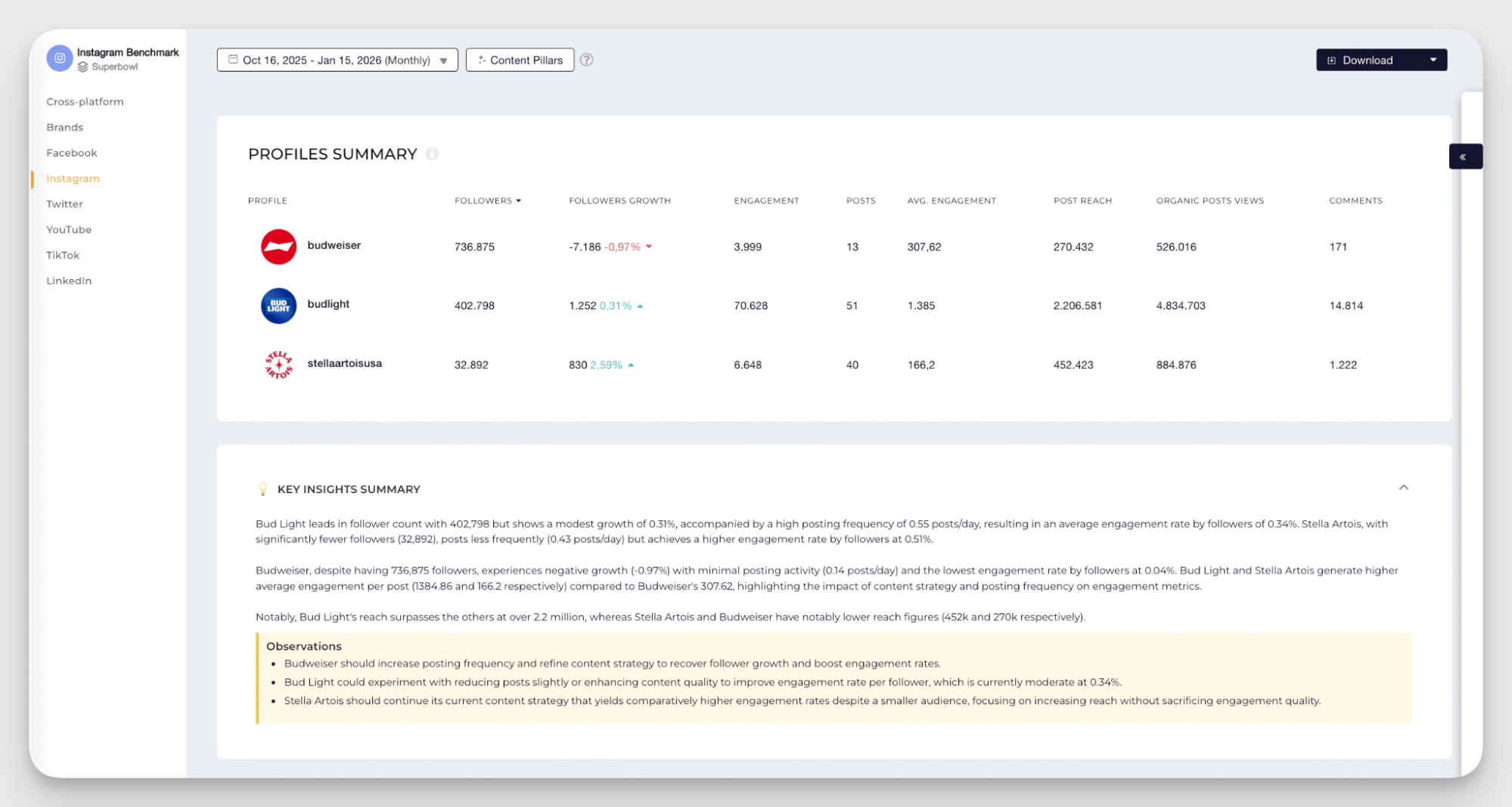

6. Limited access to competitor data

One of our customers described their biggest reason for adopting Socialinsider: “I needed something I could actually show leadership and say, ‘This is what’s happening in our space.’”

The truth is, native analytics can give you a decent picture of your own page’s performance. For competitors, though, you only see surface-level public numbers, such as the likes and comments on their posts.

Some of the common challenges I faced before using Socialinsider were:

- No historical view of competitor performance: Most tools do not store competitor data over time, making trend analysis nearly impossible.

- No access to competitor reach or impressions: Visibility is limited to public engagement metrics, leaving major context gaps.

- Manual tracking workflows: Teams resort to screenshots, spreadsheets, and one-off snapshots that do not scale or stay consistent.

How to deal with it?

- Use competitive analytics tools built for public data: Platforms like Socialinsider track public competitor performance over time, making it easier to show trends instead of isolated snapshots.

You can even get a side-by-side metric comparison and observations on what you can improve moving forward.

- Consider competitor data in context: Radhika recommended reading the numbers alongside qualitative signals to get a true picture of what’s happening in the industry.

She said:

The most reliable competitive signals show up consistently over time, like changes in posting cadence, content formats, campaign activity, and hiring patterns. Metrics such as engagement, followers, or sentiment need to be read in context and validated against launches, partnerships, analyst coverage, and pipeline signals. Any single metric, viewed on its own, can be misleading.

7. Overcoming historical data loss and gaps

You are trying to answer a simple question — Is performance improving or just fluctuating? You open the dashboard and realize the data only goes back a few months. The campaign you care about launched last year. The context is gone.

Most social platforms limit how far back data is accessible unless it has been stored externally. If a tool was not connected early enough, that history cannot be recovered.

When teams switch tools or ownership changes hands, continuity breaks even further. What you end up with is a short window of visibility that makes long-term trends, seasonality, and progress hard to explain.

This means you’re left fielding questions you don’t have the data to answer. How did we perform compared to a year ago? What were the results of a similar campaign a couple of years ago?

How to deal with it?

- Rebuild trends using historical estimates where possible: Tools like Socialinsider provide estimated historical data for metrics such as follower growth, helping recreate long-term direction.

- Create baseline snapshots during onboarding: Capture current performance when new tools or team members come in so future reporting has a clear reference point.

- Preserve historical context centrally: Store exports, benchmarks, and key reports in shared documentation to survive tool changes.

- Start collecting earlier than feels necessary: Historical data only matters once it is gone. Planning ahead avoids that scramble later.
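If you do not yet have a tool storing history for you, even a small script that appends a dated snapshot to a file is better than nothing. The sketch below writes one row per account per run to a CSV file. The column names and the idea of passing in `followers` and `engagement_rate` by hand are assumptions for illustration; in practice those values would come from an export or an official API.

```python
import csv
import os
from datetime import date

FIELDS = ["snapshot_date", "platform", "account", "followers", "engagement_rate"]


def append_snapshot(path, platform, account, followers, engagement_rate,
                    snapshot_date=None):
    """Append one dated row to a CSV file, writing the header if the file is new."""
    is_new = not os.path.exists(path) or os.path.getsize(path) == 0
    row = {
        "snapshot_date": (snapshot_date or date.today()).isoformat(),
        "platform": platform,
        "account": account,
        "followers": followers,
        "engagement_rate": engagement_rate,
    }
    with open(path, "a", newline="", encoding="utf-8") as handle:
        writer = csv.DictWriter(handle, fieldnames=FIELDS)
        if is_new:
            writer.writeheader()
        writer.writerow(row)
```

Because rows are only ever appended, the file keeps growing into exactly the kind of long-term record that platforms themselves do not keep for you, and it survives a change of tools.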

8. Inconsistent metrics across platforms

Radhika talked about how metrics can mean different things across platforms. She said:

The language looks consistent across platforms, but the math underneath is not. Impressions, reach, and engagement are calculated differently, video views are counted at different thresholds, and click metrics often bundle multiple actions under a single label. Without normalizing these differences, cross-platform comparisons can be misleading.

For example, impressions on LinkedIn and TikTok are not counted the same way. Engagement might include clicks and saves on one platform and exclude them on another. Video views depend on time thresholds that range from autoplay to a few seconds to partial completion.

When these numbers are stacked side by side, they look comparable, but they are not measuring the same behavior. That is how well-intentioned cross-platform reports turn misleading without anyone realizing it.

How to deal with it?

- Standardize metric definitions internally: Decide what each core metric means in your reports. For example, define exactly which actions count toward engagement and apply that logic consistently, even if platforms label things differently.

- Report with context: Avoid mixing absolute numbers like impressions or views across platforms. Instead, explain what each metric represents and why it is being used.

- Use platform-aware comparisons: Compare performance within the same platform first, then layer cross-platform insights only where definitions reasonably align.

- Document metric assumptions clearly: Add notes to reports that explain calculation differences so stakeholders understand the limits of comparison.
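A standardized internal definition can be as simple as a written mapping of which actions count toward engagement on each platform, applied the same way in every report. The sketch below shows the idea. The platform keys and field names are illustrative assumptions, not the platforms’ real API fields, and leaving clicks out is just one possible choice.

```python
# Hypothetical internal definition: which raw fields count as "engagement".
ENGAGEMENT_FIELDS = {
    "instagram": ["likes", "comments", "shares", "saves"],
    "linkedin": ["reactions", "comments", "reposts"],
    "tiktok": ["likes", "comments", "shares"],
}


def standard_engagement(platform, raw_metrics):
    """Sum only the actions the internal definition includes for this platform."""
    if platform not in ENGAGEMENT_FIELDS:
        raise ValueError(f"No engagement definition documented for {platform!r}")
    return sum(raw_metrics.get(field, 0) for field in ENGAGEMENT_FIELDS[platform])
```

Raising an error for an undocumented platform is deliberate. It forces someone to write the definition down before a new network’s numbers appear in a cross-platform report.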

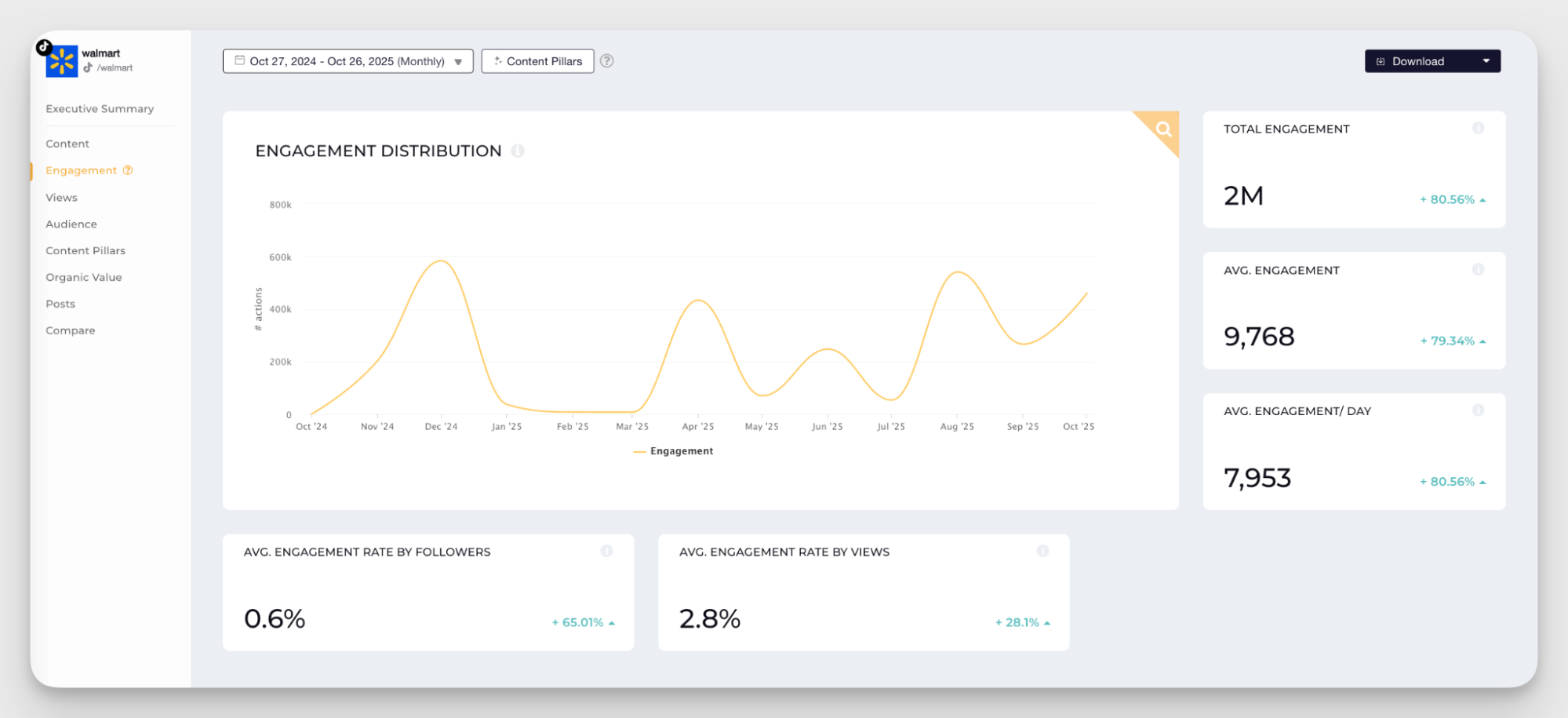

9. Overcoming time, scale, and manual effort

I have watched smart teams spend hours every month doing work that adds zero strategic value. Screenshots taken on the same day every month. Spreadsheets cleaned line by line. Metrics copied and pasted into decks, hoping nothing breaks along the way.

Truth be told, it does work when volume is low. As soon as accounts, regions, or competitors increase, the process collapses under its own weight.

Radhika mentioned the same in our conversation.

Manual data collection works when the footprint is small and the questions are simple. It breaks down at scale, when teams need consistent historical views, real-time responsiveness, and the ability to connect social signals to business outcomes. At that point, the real cost is not time spent in spreadsheets, but inconsistent data, missed signals, and slower decisions. To do serious global social listening, and attribution work, you eventually need automation, integration, and clear data standards. Otherwise the analytics side can’t keep up with the scale of the activity.

How to deal with it?

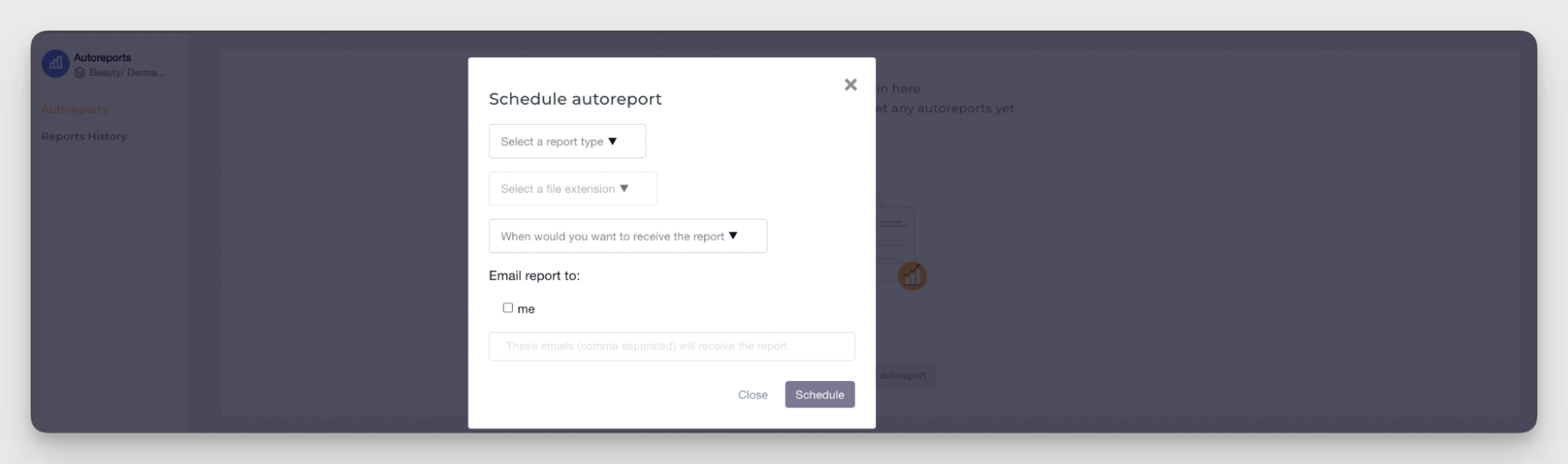

- Automate recurring reports: Set up scheduled reports that land in your inbox every week, month, or quarter, removing the need for manual reporting. Socialinsider helps you do this easily with its Autoreports feature.

- Automate collection and insight generation: Use tools like Socialinsider to automate data collection and even surface insights through features like its AI assistant. You can ask the assistant questions to get information and insights about your own and your competitors’ profiles.

- Standardize reviews with templates: Create monthly and quarterly templates so teams focus on interpretation and decisions instead of formatting and data cleanup.

10. Issues with big data

What happens when your data moves faster than your ability to make sense of it? The dashboard keeps updating, but the insight never quite lands.

At scale, social media data stops being scarce and starts being overwhelming. Metrics change by the minute. By the time a report is shared, the moment it was meant to explain has already passed.

I have also seen spikes grab attention, viral moments steal focus, and teams end up reacting instead of thinking.

How to deal with it?

- Compare performance period over period: Focus on week-over-week or month-over-month trends to understand direction and momentum, rather than reacting to live fluctuations.

- Flag anomalies without centering analysis around them: Call out spikes or drops as context, but ground insights in sustained patterns that reflect real behavior.

- Use rolling averages for stability: Apply rolling averages to smooth volatility in large datasets and surface meaningful trends.

- Sample data intentionally when volume is high: For massive datasets, sampling helps teams spot patterns faster without getting buried in volume.
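Rolling averages and period-over-period comparisons are both a few lines of arithmetic. Here is a minimal sketch, assuming you already have a list of daily values for one metric. The function names and the seven-day default window are my own choices for illustration.

```python
def rolling_average(values, window=7):
    """Trailing mean over the last `window` points; None until enough data exists."""
    averages = []
    for i in range(len(values)):
        if i + 1 < window:
            averages.append(None)
        else:
            chunk = values[i + 1 - window : i + 1]
            averages.append(sum(chunk) / window)
    return averages


def period_over_period(current, previous):
    """Percent change between two period totals; None when the previous total is zero."""
    if previous == 0:
        return None
    return 100.0 * (current - previous) / previous
```

For example, `period_over_period(120, 100)` gives a 20 percent increase. Returning `None` when there is no previous baseline avoids reporting a meaningless or infinite growth figure for a brand-new account or campaign.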

Final thoughts

Collecting social media data is really about getting the basics right. Clear definitions, consistent timelines, and systems that hold up as your accounts, platforms, and questions grow. When data is messy or biased, teams end up second-guessing numbers instead of using them.

The fix starts with better foundations. Standardized metrics, reliable historical tracking, and tools that respect platform rules and privacy.

If you want to collect social media data compliantly and with less manual effort, Socialinsider is a solid place to start. Start your 14-day free trial to see your owned and competitor data in one place, spot trends over time, and make decisions without worrying about historical gaps.
